Lessons From Building With AI Agents: 120k Lines of Code Later

In the previous article, I described my first steps in building an MVP application entirely with AI agents.

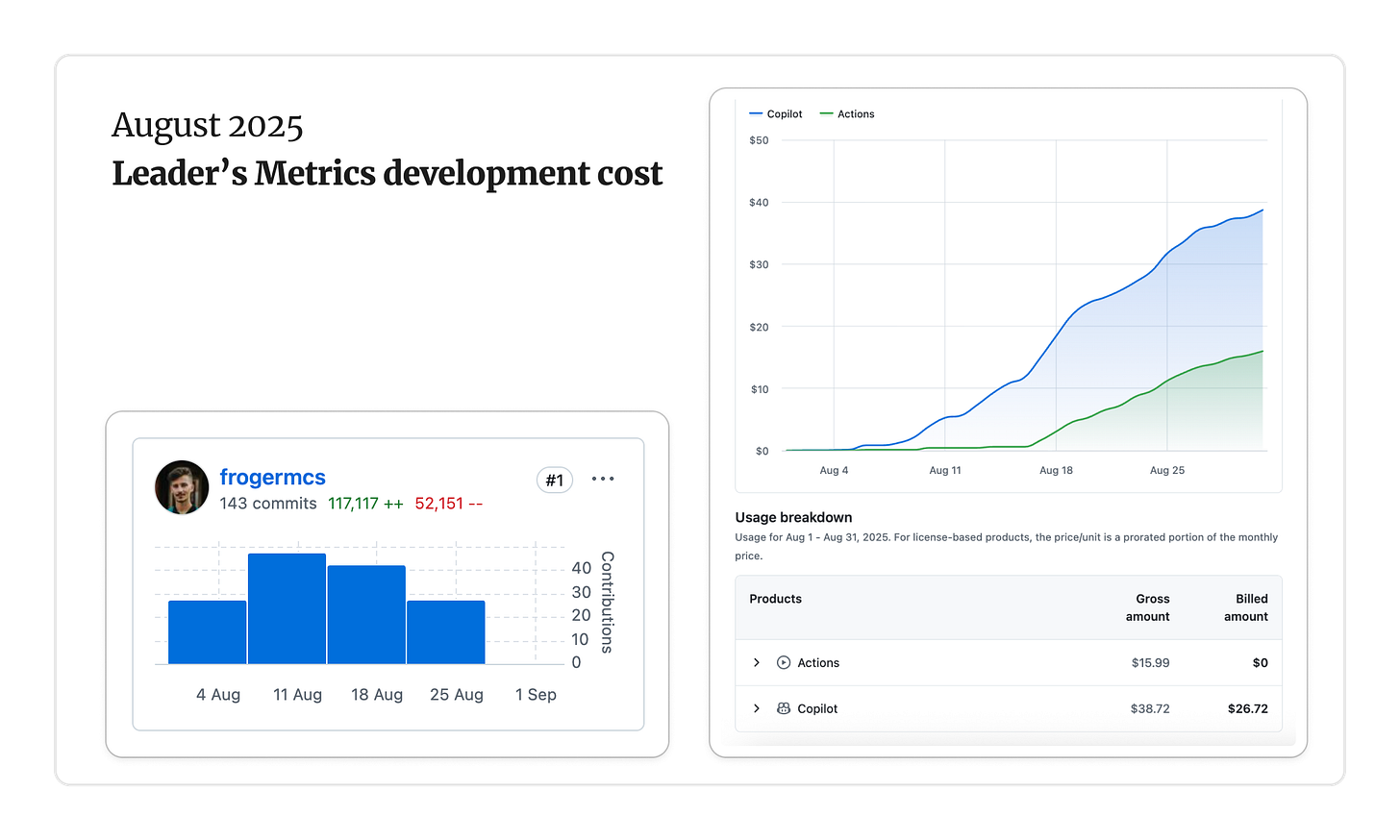

Here’s where I stand today:

120k+ LoC, 99% of it written by AI agents

73 closed pull requests reviewed by another AI agent

Almost 30 major tasks shipped, many split into smaller feature branches

Total cost: ~$56 (AI agentic work + GitHub Actions for lint/test/build)

I built Leader’s Metrics to improve my work as an engineering leader. I wanted a place where I could easily track my own goals (I usually have dozens of them) and, soon, track my team’s health in a structured way.

But the journey itself became just as valuable as the app. Most engineers I lead will soon work in AI-assisted environments, moving at a faster pace than ever. I don’t want that ride to be a crash course for me — I want to be ready to guide them.

Here are the lessons I took from this experience, written as much for myself as for other leaders preparing for the same shift.

The lessons come in no particular order. Some will hold up; others will surely change.

1. Don’t Start From Scratch

AI agents thrive in well-defined environments. If you leave gaps, they’ll fill them — but not always in the way you want.

For example, adopting the shadcn design system let me move quickly. Instead of wasting cycles on reinventing buttons and navigation, AI focused on building business logic. I shipped useful features much faster.

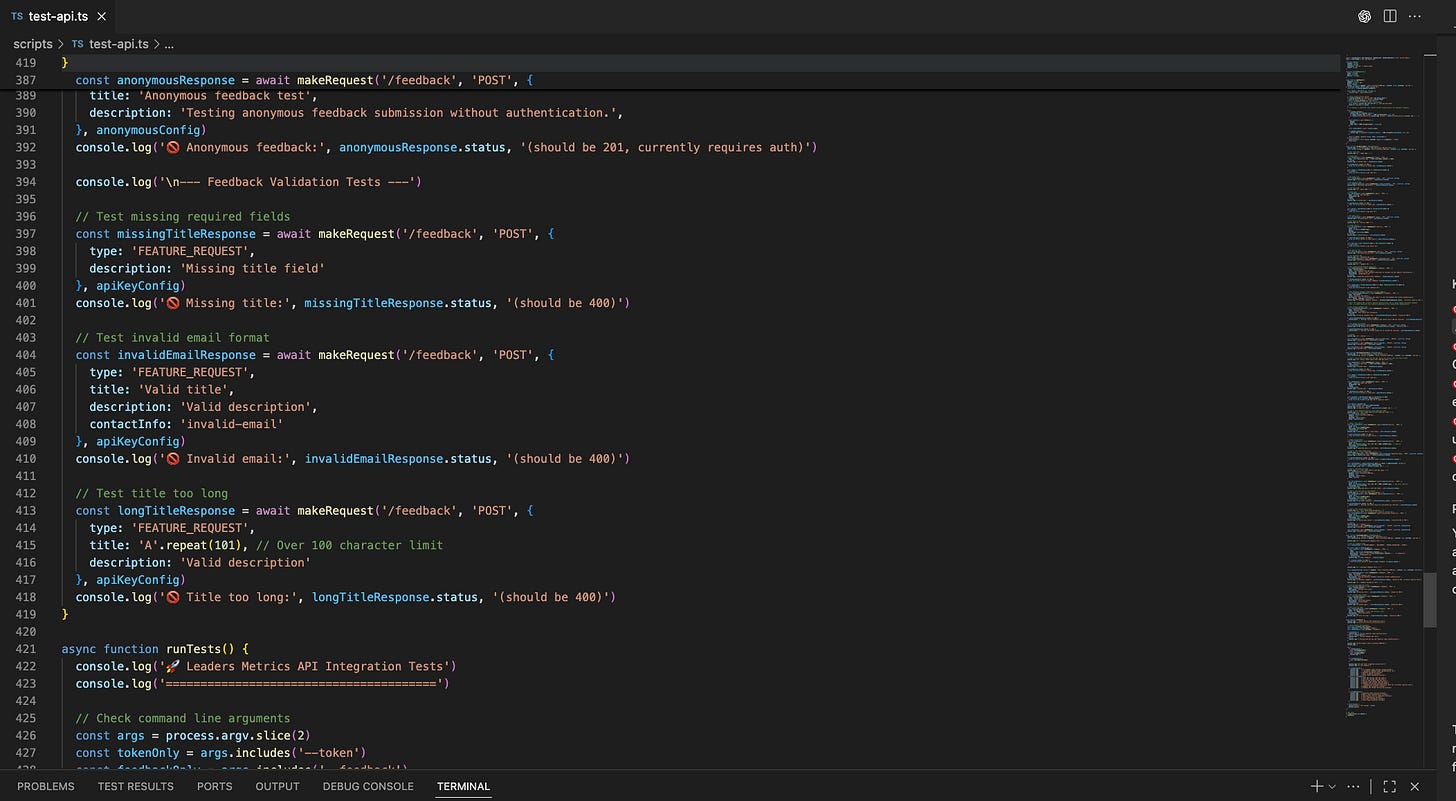

Where I hadn’t set guardrails, though, AI improvised. Lacking a clear integration testing setup, it invented independent Node scripts to test features. That was clever in the beginning, but the solution didn’t scale.

Takeaway: Define your stack, building blocks, and processes up front. The more you constrain the environment, the more leverage you get.

2. Standards Are Emerging — But Still Fragile

The standards for instructing AI agents are still shaky.

Files like AGENTS.md, CLAUDE.md, or copilot-instructions.md are supposed to guide agents consistently.

In fully agentic work (like Codex CLI or Codex Cloud), I rely on them. But in local development (VS Code + Copilot Agent), I often find myself writing instructions manually:

“Execute task (name-of-the-file.md), while following guidance from DEVELOPMENT_WORKFLOW.md, keeping standards as outlined in ARCHITECTURE.md and testing principles in TESTING_PRINCIPLES.md.”

That’s redundant, but necessary — because the agents don’t always “see” those standards on their own.
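If the same instruction gets typed again and again, it can be assembled from a list of files instead. The following is a minimal sketch under my own assumptions: `StandardDoc` and `buildTaskPrompt` are hypothetical names, not part of Codex, Copilot, or any agent tooling, and the helper only produces the text you would paste into the agent.

```typescript
// Hypothetical helper: assembles an explicit instruction for an agent.
// StandardDoc and buildTaskPrompt are illustrative names, not a real API.
interface StandardDoc {
  file: string; // exact file the agent must read, e.g. "ARCHITECTURE.md"
  role: string; // how the agent should use that file
}

function buildTaskPrompt(taskFile: string, docs: StandardDoc[]): string {
  if (taskFile.trim() === "") {
    throw new Error("taskFile must name the exact task file");
  }
  // One clause per standards file, so nothing is left for the agent to guess.
  const clauses = docs.map((d) => `${d.role} ${d.file}`);
  const guidance = clauses.length > 0 ? `, while ${clauses.join(", ")}` : "";
  return `Execute task (${taskFile})${guidance}.`;
}

const taskPrompt = buildTaskPrompt("name-of-the-file.md", [
  { file: "DEVELOPMENT_WORKFLOW.md", role: "following guidance from" },
  { file: "ARCHITECTURE.md", role: "keeping standards as outlined in" },
  { file: "TESTING_PRINCIPLES.md", role: "applying testing principles in" },
]);
console.log(taskPrompt);
```

Keeping the file list in one place means a renamed or newly added standards file only has to be updated once.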

It’s a similar story with MCP servers. In theory, I could use GitHub MCP to fetch an issue description and base agentic work on that. In practice, it’s unpredictable: sometimes it fetches the issue, other times it dumps the entire issue list. To avoid wasting tokens and context space, I usually just paste the content myself.

Takeaway: Standards like AGENTS.md and MCPs will matter a lot in the future, but for now, don’t assume they’ll work flawlessly. Be prepared to supplement AI agents with explicit instructions and specify the exact files.

3. Build for Rollback, Not Just Speed

In AI-assisted development, it’s not enough to ship quickly — you also need to undo quickly.

Thanks to Vercel’s deployment process, I can push to production in under 10 minutes. But the real value comes from how easy it is to roll things back:

When I prototype something new, I put it in a dedicated directory (e.g. /early-prototypes) with all its logic self-contained. If the experiment fails, I just delete the directory and the app is back to normal.

Every new feature ships with seed data and a demo account, which reset every 1–24 hours. That way I can test aggressively without worrying about breaking real flows.
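The periodic reset of demo data can be sketched as a small pure function. This is an illustration under stated assumptions, not the application's actual code: the `DemoAccount` shape, the seed goals, and every function name are hypothetical, and the data lives in memory where a real app would use its database and a scheduled job.

```typescript
// Hypothetical in-memory model of a demo account; a real app would use its database.
interface Goal {
  id: string;
  title: string;
}

interface DemoAccount {
  goals: Goal[];
  lastResetAt: number; // epoch milliseconds
}

// Illustrative seed data, not taken from the real application.
const SEED_GOALS: readonly Goal[] = [
  { id: "seed-1", title: "Run weekly 1:1s" },
  { id: "seed-2", title: "Reduce review turnaround" },
];

const HOUR_MS = 60 * 60 * 1000;

// True when at least `intervalHours` have passed since the last reset.
function isResetDue(account: DemoAccount, now: number, intervalHours: number): boolean {
  return now - account.lastResetAt >= intervalHours * HOUR_MS;
}

// Builds a fresh state from copies of the seed, so later edits never touch the seed itself.
function resetDemoAccount(now: number): DemoAccount {
  return { goals: SEED_GOALS.map((g) => ({ ...g })), lastResetAt: now };
}

// Returns the account unchanged until a reset is due, then a fresh seeded state.
function maybeReset(account: DemoAccount, now: number, intervalHours: number): DemoAccount {
  return isResetDue(account, now, intervalHours) ? resetDemoAccount(now) : account;
}
```

Passing `now` in as a parameter, instead of reading the clock inside the function, keeps the reset rule easy to test with fixed timestamps.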

In the future, I’ll add feature flags, rollback pipelines, and staged rollouts — but even the simple structure I use now saves me headaches.

Takeaway: AI accelerates delivery, but without kill switches, removable prototypes, and regenerating data, you risk chaos. Build systems that let you pull back as fast as you push forward.

4. Expect Non-Determinism

Even with perfect prompts, AI can still drift.

When I integrated Auth.js, I provided the entire documentation as markdown. I thought it was bulletproof. Instead, the agent hallucinated a few fields in the DB model. The integration failed silently, and I spent an hour debugging something I assumed was too obvious to check.

Takeaway: AI is powerful, but not predictable. Even for “obvious” steps, verify the output. Don’t outsource your trust.
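A cheap way to verify this kind of output is to compare the generated model's field names against a reference list. The sketch below is hypothetical throughout: `diffFields` is my own helper, the reference list is an assumption standing in for the schema in the documentation you gave the agent, and the "generated" fields are invented for illustration. Copy the real field names from the official docs before relying on a check like this.

```typescript
interface FieldDiff {
  missing: string[];    // in the reference, absent from the generated model
  unexpected: string[]; // in the generated model, absent from the reference
}

// Compares two lists of field names and reports drift in both directions.
function diffFields(expected: string[], actual: string[]): FieldDiff {
  const expectedSet = new Set(expected);
  const actualSet = new Set(actual);
  return {
    missing: expected.filter((f) => !actualSet.has(f)),
    unexpected: actual.filter((f) => !expectedSet.has(f)),
  };
}

// Assumed reference list: replace it with the one from the documentation you supplied.
const expectedSessionFields = ["id", "sessionToken", "userId", "expires"];
// Hypothetical agent output with one renamed and one invented field.
const generatedSessionFields = ["id", "token", "userId", "expires", "deviceName"];

const diff = diffFields(expectedSessionFields, generatedSessionFields);
if (diff.missing.length > 0 || diff.unexpected.length > 0) {
  console.error("Model drifted from the reference:", diff);
}
```

Run as a test in CI, a check like this turns a silent integration failure into a loud one.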

5. The Four Types of AI Work

Not all engineering tasks are equal. Over time, I realized there are four distinct categories of AI-assisted work.

What makes them different isn’t just scope — it’s how much context you can give, how tight the constraints are, and what testing tells you.

1. Implementation – building something new

This is the broadest category: UI, API, tests, docs, and business logic all in one. Here, context and constraints are everything. If the spec is fuzzy, AI will wander. If the DoD, architecture, and testing principles are precise, it delivers surprisingly well.

Example: when I asked for “goal management” as one feature, the agent mashed everything together and tests were sloppy. When I split it into create, edit, and delete as separate tasks, the results were much sharper: cleaner code, clearer tests, better separation of concerns.

Expect: ~80% of work complete on first try, ~20% refinement.

Key: keep tasks small and self-contained, with explicit test cases.
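To make "small and self-contained, with explicit test cases" checkable, a task can be described as data and reviewed before it goes to the agent. This is only a sketch: the `TaskSpec` shape, the `reviewTaskSpec` helper, and the default limit of five done-criteria are assumptions of mine, not a standard.

```typescript
// Hypothetical task description; field names are illustrative.
interface TaskSpec {
  title: string;
  definitionOfDone: string[];
  testCases: string[];
}

// Returns a list of problems; an empty list means the task is ready to hand over.
// The default limit of 5 done-criteria is an arbitrary assumption.
function reviewTaskSpec(task: TaskSpec, maxDoneItems = 5): string[] {
  const problems: string[] = [];
  if (task.testCases.length === 0) problems.push("no explicit test cases");
  if (task.definitionOfDone.length === 0) problems.push("no definition of done");
  if (task.definitionOfDone.length > maxDoneItems) {
    problems.push("scope too broad, consider splitting");
  }
  return problems;
}

const createGoal: TaskSpec = {
  title: "Create goal",
  definitionOfDone: ["Form validates required fields", "Goal is persisted and listed"],
  testCases: ["rejects an empty title", "saves a valid goal"],
};

const goalManagement: TaskSpec = {
  title: "Goal management",
  definitionOfDone: [],
  testCases: [],
};

console.log(reviewTaskSpec(createGoal));     // []
console.log(reviewTaskSpec(goalManagement)); // two problems: no test cases, no definition of done
```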

The entire article with 12 lessons from my work is available only for paid subscribers.


Thanks for supporting Practical Engineering Management!