AI Is Everywhere, But Trust in It Is Nowhere

Five leadership moves for the AI adoption paradox

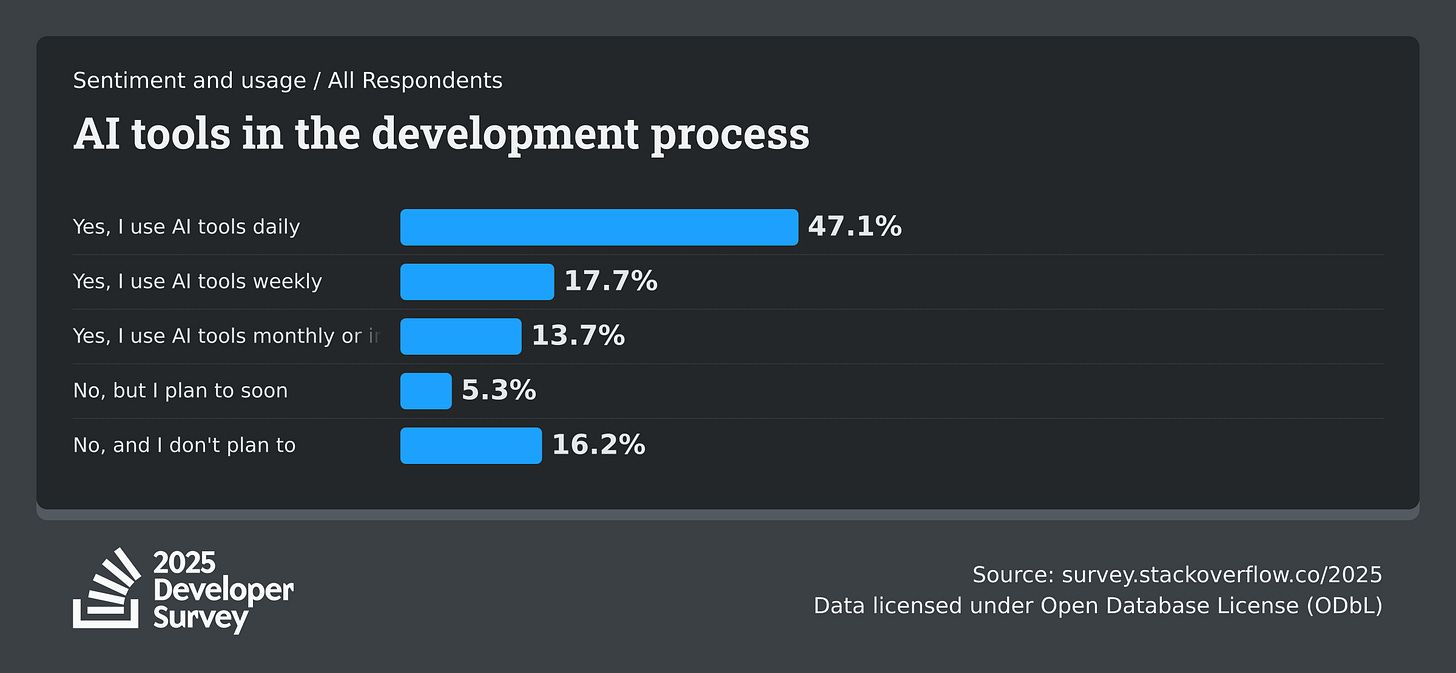

The 2025 Developer Survey from Stack Overflow is out.

Its sections on developer demographics and technology trends are always worth reading, since they can shape your hiring strategy and how your tech stack evolves.

But the AI-trends section? That’s the one senior leadership keeps asking you about.

Many of you have told me that your executives now list “adopt AI in our work” as a top priority.

Let’s dive in!

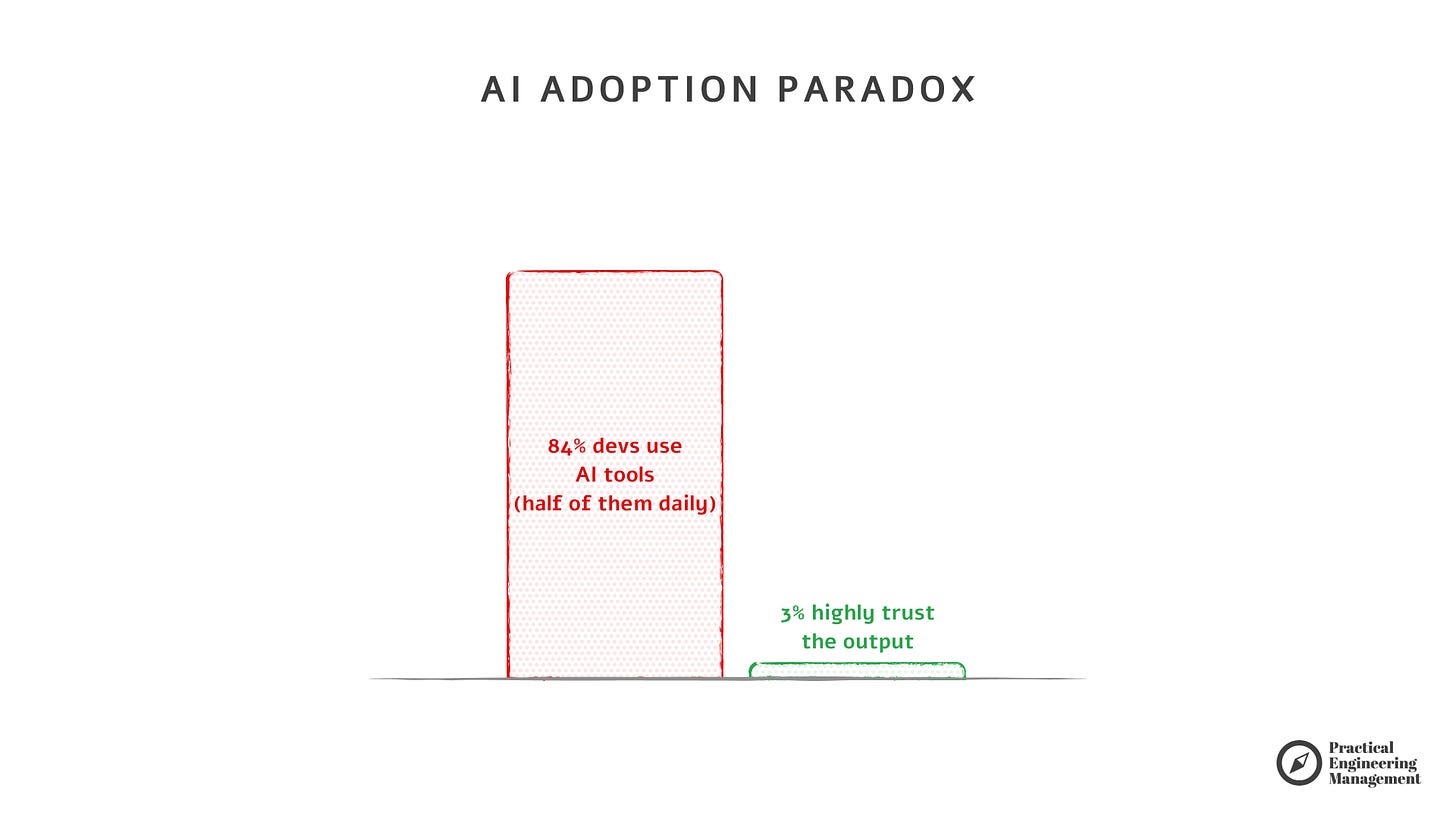

Here’s the paradox:

In 2025, AI tools are nearly universal.

84% of developers use or plan to use them, and about half of professional developers use them daily.

But the real problem 👉 only 3% of engineers say they highly trust the output.

Adoption is high. Confidence is collapsing.

If your only AI strategy is to buy Copilot licenses for everyone, you won’t stand out anymore.

This isn’t about whether your team uses AI — they already do.

It’s about figuring out what to do when the tool suggests something “almost right,” and your engineers spend an hour untangling it.

Let’s walk through five patterns from the Stack Overflow 2025 Survey that should reshape your approach:

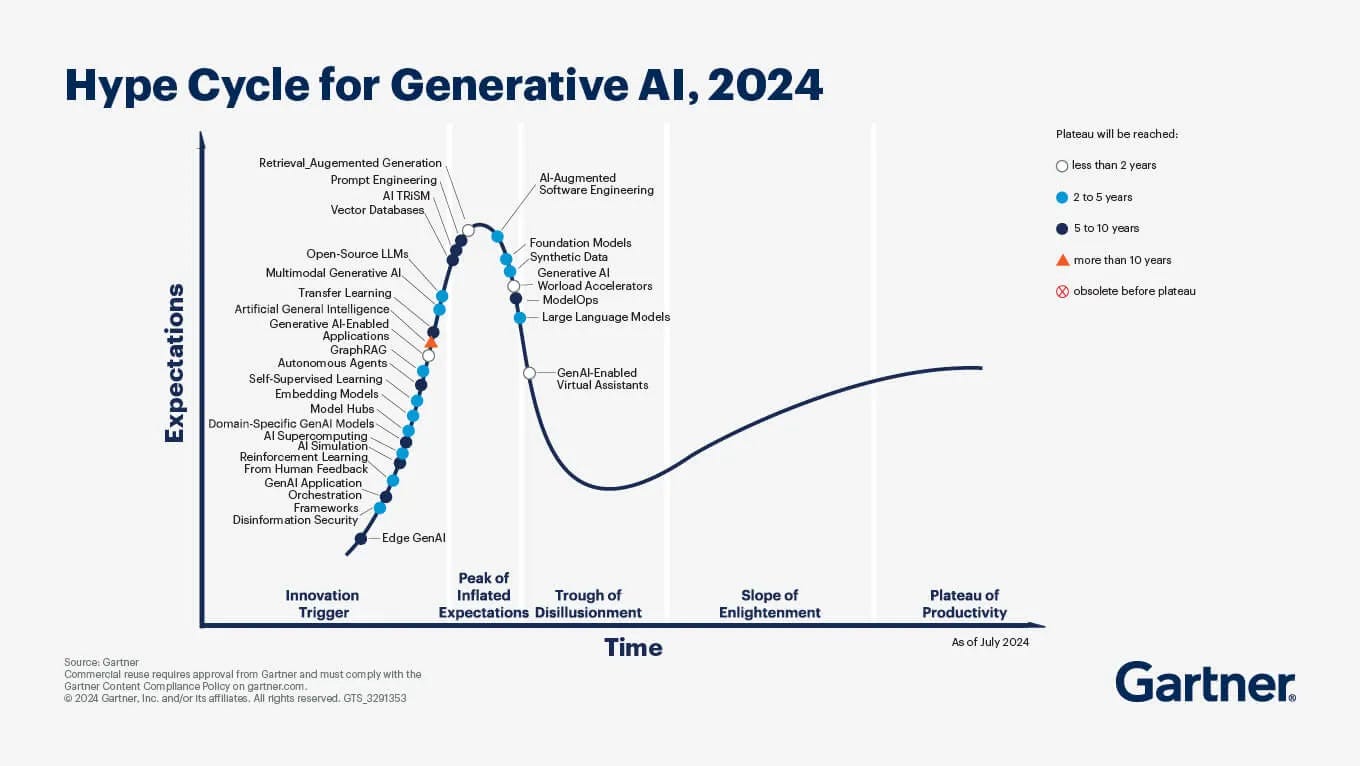

1. Everyone uses AI — but trust is eroding

Developers are pragmatic. They’ll try new tools.

But they also know when they’re being burned.

Sentiment toward AI tools has cooled.

Over 70% of developers viewed them favorably in 2023 and 2024.

This year, that figure is only about 60%.

More developers now distrust AI output (46%) than trust it (33%).

Just 3% “highly trust” the results.

We can generate dozens of tests in minutes — but if your codebase is messy, most will be junk.

We can produce pages of text instantly — but if only one paragraph has real value, it’s just noise.

Productivity was never about how much you produce, but how much value you deliver.

Try this

Track not just how much your team uses AI, but also what value it delivers.

Here's my flow these days:

Track AI usage (e.g., GitHub Copilot’s Usage API). Are developers using it daily? Has usage dropped off? If so, why?

Ask power users and occasional users about their workflows. Compare their results.

Look for patterns by seniority — less experienced engineers often struggle more with AI.

Measure impact: has AI improved code quality, review speed, or delivery stability?

Supplement with a short internal survey to capture sentiment and use cases.
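If you want to try the first three steps before buying a dashboard tool, they fit in a few lines of Python. This is a minimal sketch, assuming you can export one record per developer per working day with an “active” flag. The `UsageDay` schema, the 60% power-user threshold, and the seniority labels are hypothetical choices for illustration, not fields from GitHub’s API; you would map whatever your export provides into this shape.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class UsageDay:
    """One developer's AI-assistant activity on one working day (hypothetical schema)."""
    developer: str
    day: date
    active: bool


# Assumption: active on at least 60% of recorded days counts as a "power user".
POWER_USER_SHARE = 0.6


def active_share(records):
    """Fraction of recorded days on which each developer used the AI tool."""
    totals = defaultdict(int)
    actives = defaultdict(int)
    for r in records:
        totals[r.developer] += 1
        actives[r.developer] += int(r.active)
    return {dev: actives[dev] / totals[dev] for dev in totals}


def segment(shares, threshold=POWER_USER_SHARE):
    """Label each developer as a power or occasional user (step 2: whom to interview)."""
    return {dev: ("power" if s >= threshold else "occasional") for dev, s in shares.items()}


def dropped_off(records, cutoff):
    """Developers active before `cutoff` with no activity on or after it (step 1: drop-off)."""
    before = {r.developer for r in records if r.active and r.day < cutoff}
    after = {r.developer for r in records if r.active and r.day >= cutoff}
    return sorted(before - after)


def share_by_seniority(shares, seniority):
    """Average active share per seniority level (step 3: patterns by seniority)."""
    groups = defaultdict(list)
    for dev, s in shares.items():
        groups[seniority.get(dev, "unknown")].append(s)
    return {level: sum(v) / len(v) for level, v in groups.items()}


# Usage with made-up data for three hypothetical developers over ten working days.
start = date(2025, 6, 2)
days = [start + timedelta(days=i) for i in range(10)]
records = (
    [UsageDay("ana", d, True) for d in days]                      # uses AI every day
    + [UsageDay("ben", d, i < 5) for i, d in enumerate(days)]     # stopped halfway
    + [UsageDay("cy", d, i % 4 == 0) for i, d in enumerate(days)] # occasional
)
shares = active_share(records)
print(segment(shares))                    # {'ana': 'power', 'ben': 'occasional', 'cy': 'occasional'}
print(dropped_off(records, cutoff=days[5]))  # ['ben']
print(share_by_seniority(shares, {"ana": "senior", "ben": "mid", "cy": "junior"}))
# {'senior': 1.0, 'mid': 0.5, 'junior': 0.3}
```

The numbers only tell you whom to talk to. The conversations in step 2 and the survey in step 5 tell you why.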

In my experience, engineers with less software engineering craftsmanship (seniority, fluency in design patterns, architecture, and programming paradigms) struggle the most with AI.

DORA research finds that AI cuts both ways: it is associated with better code quality but also with lower delivery stability.

Extra read: Why ‘AI-Built Apps in Minutes’ Is a Lie

The full article and the PDF/Notion/doc templates are available only to paid subscribers. You can use your training budget to cover it (here’s a slide for your HR).

Thanks for supporting Practical Engineering Management!